🤖 Quantization in AI: Smaller, Faster, and More Efficient Models

The increasing demand for AI-powered devices, such as smartphones, smart home devices, and autonomous vehicles, has created a need for efficient machine-learning models that can run on limited computational resources.

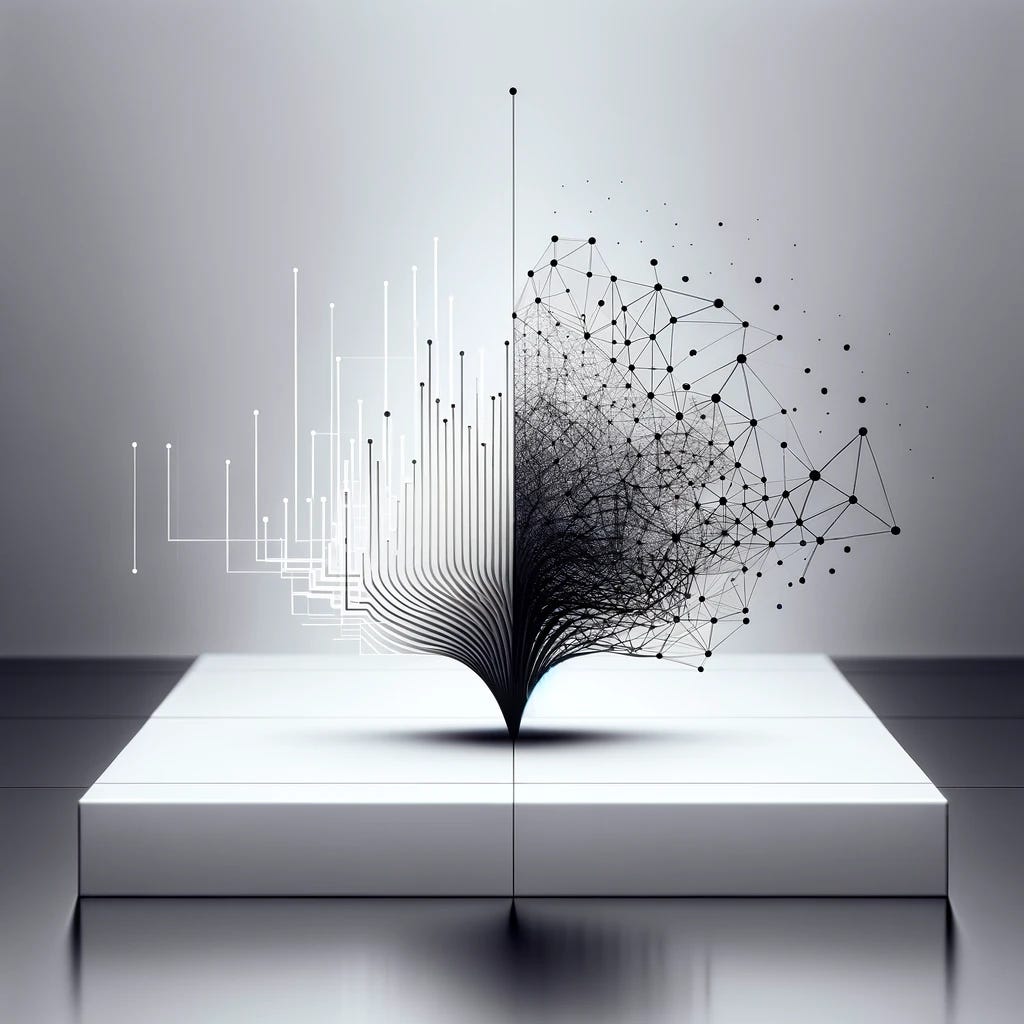

Quantization in artificial intelligence, particularly within machine learning and neural network models, refers to reducing the precision of the numbers used to represent model parameters.

This technique is used mainly to compress machine learning models and speed up inference; less commonly, it is also applied to accelerate training.

Smaller, faster models are better suited to deployment on devices with limited computational resources, such as mobile phones or embedded systems.

📚 Definition and Purpose

Quantization involves converting a continuous range of values into a finite set of discrete values.

In AI, this usually means converting the 32-bit floating-point numbers typically used for model weights and activations into lower-precision formats such as 16-bit floating point or 8-bit integers.
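As a rough illustration, the mapping from 32-bit floats to 8-bit integers can be sketched in Python with NumPy. This is a minimal sketch assuming affine (asymmetric) per-tensor quantization, one common scheme among several; the helper names `quantize_int8` and `dequantize` are hypothetical and not part of any library.

```python
import numpy as np

def quantize_int8(x: np.ndarray) -> tuple[np.ndarray, float, int]:
    """Affine (asymmetric) per-tensor quantization of a float array to int8."""
    qmin, qmax = -128, 127
    # Widen the range to include 0 so that zero is represented exactly.
    x_min, x_max = min(float(x.min()), 0.0), max(float(x.max()), 0.0)
    scale = (x_max - x_min) / (qmax - qmin)  # float step between integer levels
    if scale == 0.0:
        scale = 1.0  # all-zero input: any positive scale works
    zero_point = int(round(qmin - x_min / scale))  # integer that represents 0.0
    q = np.clip(np.round(x / scale) + zero_point, qmin, qmax).astype(np.int8)
    return q, scale, zero_point

def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Map int8 codes back to approximate float values."""
    return (q.astype(np.float32) - zero_point) * scale

weights = np.array([-1.0, -0.5, 0.0, 0.25, 0.9], dtype=np.float32)
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
print("int8 codes:", q)
print("restored:  ", restored)
print("max error: ", np.abs(weights - restored).max(), "step size:", scale)
```

Each value is stored as a one-byte integer plus a shared `scale` and `zero_point`. Rounding is what makes the mapping lossy: for values inside the range, the error is at most half a quantization step.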

The primary goal is to reduce resource requirements, such as memory footprint, compute, and power consumption, without significantly compromising the model's accuracy.

For example, consider a facial recognition model deployed on a smart doorbell. The model must process images quickly to recognize faces and grant access. Quantizing the model allows it to run on the doorbell's limited hardware and reduces latency.

🔄 Process

Model Training: Initially, the model is trained using high-precision (usually 32-bit floating-point) parameters.

Quantization: After training, the model parameters, and sometimes the activations, are converted from floating-point to a lower-precision integer representation. Because this step happens after training, it is known as post-training quantization.
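The two steps can be sketched on a toy linear layer. This is a minimal post-training quantization example, assuming symmetric per-tensor int8 scales for both weights and activations; the random weights stand in for a trained model, and taking the activation scale from a single batch stands in for a proper calibration step. The helper `symmetric_int8` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Step 1 stand-in: a "trained" float32 linear layer and a batch of inputs.
W = rng.normal(0.0, 0.1, size=(64, 128)).astype(np.float32)
x = rng.normal(0.0, 1.0, size=(128, 8)).astype(np.float32)

def symmetric_int8(t: np.ndarray) -> tuple[np.ndarray, float]:
    """Map floats to int8 in [-127, 127] using one scale for the whole tensor."""
    scale = float(np.abs(t).max()) / 127.0
    q = np.clip(np.round(t / scale), -127, 127).astype(np.int8)
    return q, scale

# Step 2: post-training quantization of weights and activations.
W_q, w_scale = symmetric_int8(W)
x_q, x_scale = symmetric_int8(x)  # in practice this scale comes from calibration data

# Integer matmul with an int32 accumulator, then a single float rescale.
acc = W_q.astype(np.int32) @ x_q.astype(np.int32)
y_q = acc.astype(np.float32) * (w_scale * x_scale)

y_ref = W @ x  # full-precision reference output
rel_err = float(np.linalg.norm(y_q - y_ref) / np.linalg.norm(y_ref))
print(f"weight storage: {W.nbytes} bytes -> {W_q.nbytes} bytes")  # 32768 -> 8192
print(f"relative output error: {rel_err:.4f}")
```

The multiply-accumulate work runs on integers, and one floating-point rescale at the end recovers an approximate output; this is roughly the pattern that lets hardware with fast integer units benefit from quantized models.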

✅ Benefits include reduced model size, increased inference speed, and better energy efficiency.
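The size reduction is simple arithmetic: each parameter shrinks from 4 bytes (float32) to 2 bytes (float16) or 1 byte (int8). A quick sketch, assuming a hypothetical 25-million-parameter model and counting parameter storage only (no activations or file metadata); `param_storage_mb` is an illustrative helper.

```python
import numpy as np

def param_storage_mb(num_params: int, dtype) -> float:
    """Bytes needed for the parameters alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * np.dtype(dtype).itemsize / 1e6

num_params = 25_000_000  # hypothetical model size

for dtype in (np.float32, np.float16, np.int8):
    print(f"{np.dtype(dtype).name:>7}: {param_storage_mb(num_params, dtype):.0f} MB")
# float32: 100 MB, float16: 50 MB, int8: 25 MB
```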

⚖️ Challenges

Accuracy Trade-off: Quantization discards information, which can degrade the model's accuracy, especially when the precision reduction is large (for example, 4 bits or fewer instead of 8).
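To see how the loss of information grows as precision drops, the sketch below quantizes the same random values at several bit widths and measures the reconstruction error. It assumes symmetric per-tensor quantization and normally distributed weights, so the numbers are illustrative rather than representative of any particular model; `fake_quantize` is a hypothetical helper name.

```python
import numpy as np

def fake_quantize(x: np.ndarray, num_bits: int) -> np.ndarray:
    """Symmetric per-tensor quantize-then-dequantize with signed `num_bits` integers."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits, 1 for 2 bits
    scale = np.abs(x).max() / qmax
    return np.clip(np.round(x / scale), -qmax, qmax) * scale

rng = np.random.default_rng(0)
w = rng.normal(0.0, 1.0, size=10_000).astype(np.float32)

rms_error = {}
for bits in (8, 6, 4, 2):
    rms_error[bits] = float(np.sqrt(np.mean((w - fake_quantize(w, bits)) ** 2)))
    print(f"{bits}-bit RMS error: {rms_error[bits]:.4f}")
```

Each bit removed roughly halves the number of available levels, so the step size, and with it the rounding error, grows quickly at low bit widths.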

To mitigate this, developers can use techniques such as quantization-aware training, which simulates quantization (rounding and clipping) during training so that the model learns weights that are robust to the reduced precision.
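The core mechanics of quantization-aware training can be sketched on a toy linear regression. This assumes "fake" quantization (quantize, then immediately dequantize) in the forward pass and the straight-through estimator (STE) in the backward pass, a common pairing but not the only one; the data, the 4-bit width, the learning rate, and the step count are arbitrary choices for illustration.

```python
import numpy as np

def fake_quantize(w: np.ndarray, num_bits: int = 4) -> np.ndarray:
    """Quantize then dequantize, so the forward pass only sees values on the int grid."""
    qmax = 2 ** (num_bits - 1) - 1
    scale = np.abs(w).max() / qmax
    return np.clip(np.round(w / scale), -qmax, qmax) * scale

rng = np.random.default_rng(0)
X = rng.normal(size=(256, 8))
w_true = rng.normal(size=8)
y = X @ w_true  # toy regression targets

def mse(weights: np.ndarray) -> float:
    return float(np.mean((X @ weights - y) ** 2))

w = rng.normal(scale=0.1, size=8)  # full-precision "shadow" weights that get updated
lr = 0.05
initial_loss = mse(fake_quantize(w))

for _ in range(500):
    w_q = fake_quantize(w)                     # forward pass uses 4-bit weights
    grad = 2.0 * X.T @ (X @ w_q - y) / len(y)  # gradient w.r.t. the quantized weights
    w -= lr * grad                             # STE: treat d(w_q)/d(w) as 1 and update w

final_loss = mse(fake_quantize(w))
print(f"loss with 4-bit weights: {initial_loss:.3f} -> {final_loss:.3f}")
```

The full-precision weights `w` keep accumulating small gradient updates even when those updates are too small to change the rounded values, so the quantized weights can still move between grid points over time.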

Additionally, some models are more amenable to quantization than others, depending on their architecture and the kind of data they process.
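One illustrative reason, offered as an example rather than a complete explanation: with a single per-tensor scale, a few extreme values stretch the quantization range and leave fewer levels for everything else. The sketch below assumes symmetric int8 quantization and an artificial outlier of 100 in otherwise normally distributed values; `int8_rms_error` is a hypothetical helper.

```python
import numpy as np

def int8_rms_error(x: np.ndarray) -> float:
    """Symmetric per-tensor int8 round trip; returns the RMS reconstruction error."""
    scale = np.abs(x).max() / 127.0
    x_hat = np.clip(np.round(x / scale), -127, 127) * scale
    return float(np.sqrt(np.mean((x - x_hat) ** 2)))

rng = np.random.default_rng(0)
acts = rng.normal(0.0, 1.0, size=4096)
acts_outlier = acts.copy()
acts_outlier[0] = 100.0  # one artificial extreme value

err_plain = int8_rms_error(acts)
err_outlier = int8_rms_error(acts_outlier)
print(f"RMS error without outlier: {err_plain:.4f}")
print(f"RMS error with one outlier: {err_outlier:.4f}")
```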